Just a week ago, the internet had a small heart attack. At the time, most netizens worldwide blamed Cloudflare, and they had some reason to, but the issue was a bit more complicated.

A small company in Northern Pennsylvania became a preferred path of many Internet routes through Verizon (AS701), a major Internet transit provider. This was the equivalent of Waze routing an entire freeway down a neighborhood street — resulting in many websites on Cloudflare, and many other providers, to be unavailable from large parts of the Internet. Cloudflare states that this should never have happened because Verizon should never have forwarded those routes to the rest of the Internet.

Cloudflare

However, to be fair, these issues are quite common. Humans make mistakes, but as long as we learn from them, we should be good for the future.

With that said, it happened again yesterday. This time, though, Cloudflare was indeed the one to blame: a bad software deploy, to be exact. Read on for the full update on the issue from the Cloudflare team:

This is a short placeholder blog and will be replaced with a full post-mortem and disclosure of what happened for about 30 minutes yesterday July 2nd

This was not an attack (as some have speculated) and we are incredibly sorry that this incident occurred. Internal teams are meeting as I write performing a full post-mortem to understand how this occurred and how we prevent this from ever occurring again.

Update at 2009 UTC:

Starting at 1342 UTC today we experienced a global outage across our network that resulted in visitors to Cloudflare-proxied domains being shown 502 errors (“Bad Gateway”). The cause of this outage was deployment of a single misconfigured rule within the Cloudflare Web Application Firewall (WAF) during a routine deployment of new Cloudflare WAF Managed rules.

The intent of these new rules was to improve the blocking of inline JavaScript that is used in attacks. These rules were being deployed in a simulated mode where issues are identified and logged by the new rule but no customer traffic is actually blocked so that we can measure false positive rates and ensure that the new rules do not cause problems when they are deployed into full production.
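To make the idea of a simulated mode concrete, here is a minimal Python sketch. It is not Cloudflare's code: the `Rule` and `Verdict` types, the rule IDs, and the two patterns are all made up for illustration. A rule in `simulate` mode records a match but never blocks the request.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Rule:
    rule_id: str          # hypothetical identifier, for illustration only
    pattern: re.Pattern   # compiled regular expression to run on the request body
    mode: str             # "simulate" (log only) or "block"


@dataclass
class Verdict:
    blocked: bool = False
    logged: list = field(default_factory=list)  # IDs of every rule that matched


def evaluate(rules, body):
    """Run every rule against the body; only rules in "block" mode can block."""
    verdict = Verdict()
    for rule in rules:
        if rule.pattern.search(body):
            verdict.logged.append(rule.rule_id)
            if rule.mode == "block":
                verdict.blocked = True
    return verdict


# Two toy rules: a new one being trialled and an older one already enforcing.
RULES = [
    Rule("inline-js-simulated", re.compile(r"<script\b", re.IGNORECASE), "simulate"),
    Rule("sql-comment-blocking", re.compile(r"--\s*$"), "block"),
]

if __name__ == "__main__":
    print(evaluate(RULES, "<script>alert(1)</script>"))
    print(evaluate(RULES, "x' OR 1=1 --"))
```

Note that the simulated rule's regular expression still runs on every request. Simulation protects customers from false positives, but not from the cost of evaluating the rule itself.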

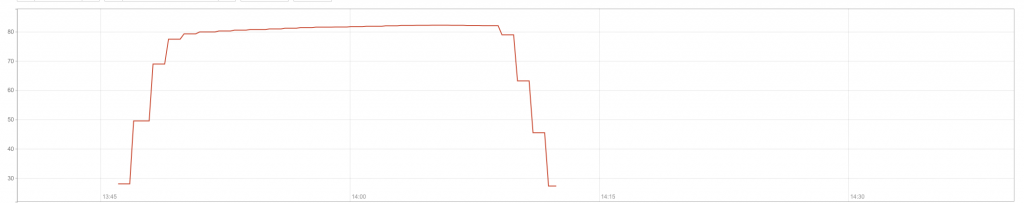

Unfortunately, one of these rules contained a regular expression that caused CPU to spike to 100% on our machines worldwide. This 100% CPU spike caused the 502 errors that our customers saw. At its worst traffic dropped by 82%.
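The statement does not say which regular expression was at fault, so the sketch below uses a classic textbook pattern, `(a+)+$`, purely as an assumption to show how a backtracking regex engine can burn CPU. When the input almost matches, Python's `re` module tries every way of splitting the run of `a` characters before giving up, and the work roughly doubles with each extra character.

```python
import re
import time

# Textbook example of a pattern prone to catastrophic backtracking.
# This is NOT the rule from the incident; it is only an illustration.
PATTERN = re.compile(r"(a+)+$")


def time_failed_match(n):
    """Time a match attempt that is doomed to fail on n 'a's followed by 'b'."""
    subject = "a" * n + "b"
    start = time.perf_counter()
    result = PATTERN.match(subject)
    elapsed = time.perf_counter() - start
    assert result is None  # the trailing 'b' prevents any match
    return elapsed


def measure(sizes=(8, 12, 16, 20)):
    """Return a mapping of input size to seconds spent failing to match."""
    return {n: time_failed_match(n) for n in sizes}


if __name__ == "__main__":
    for n, seconds in measure().items():
        print(f"n={n:2d}  {seconds:.6f}s")
```

Exact timings depend on the machine, but the growth should be obvious. Keep `n` small: a few more characters are enough to pin a CPU core for minutes.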

This chart shows CPU spiking in one of our PoPs:

We were seeing an unprecedented CPU exhaustion event, which was novel for us as we had not experienced global CPU exhaustion before.

We make software deployments constantly across the network and have automated systems to run test suites and a procedure for deploying progressively to prevent incidents. Unfortunately, these WAF rules were deployed globally in one go and caused today’s outage.
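For readers unfamiliar with progressive deployment, the sketch below shows the general shape of the idea. It is a sketch under assumptions, not Cloudflare's pipeline: the stage names, the CPU threshold, and the `apply_change`, `is_healthy` and `rollback` callbacks are all hypothetical. A change reaches the next stage only if the previous one stayed healthy.

```python
def progressive_deploy(stages, apply_change, is_healthy, rollback):
    """Apply a change stage by stage, stopping and rolling back on bad health.

    Returns (success, touched), where touched lists the stages that
    received the change before the rollout finished or was halted.
    """
    touched = []
    for stage in stages:
        apply_change(stage)
        touched.append(stage)
        if not is_healthy(stage):
            # Undo in reverse order so the most recent stage recovers first.
            for done in reversed(touched):
                rollback(done)
            return False, touched
    return True, touched


def demo():
    """Simulate a bad rule that pins CPU at 100% wherever it is deployed."""
    cpu = {}  # hypothetical per-stage CPU percentage

    def apply_change(stage):
        cpu[stage] = 100

    def is_healthy(stage):
        return cpu[stage] < 80  # assumed health threshold

    def rollback(stage):
        cpu[stage] = 5

    stages = ["canary-pop", "one-region", "global"]
    ok, touched = progressive_deploy(stages, apply_change, is_healthy, rollback)
    return ok, touched, cpu


if __name__ == "__main__":
    print(demo())
```

In this toy run the rollout halts at the first stage, so the later stages never see the bad change. That is the protection that is lost when a change goes out globally in one step.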

At 1402 UTC we understood what was happening and decided to issue a ‘global kill’ on the WAF Managed Rulesets, which instantly dropped CPU back to normal and restored traffic. That occurred at 1409 UTC.
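A 'global kill' of this kind can be pictured as a single switch that every request checks before running the managed rules. The sketch below is only an illustration of that idea; the `ManagedRuleset` class and its methods are invented here and say nothing about how Cloudflare implements it.

```python
import re
import threading


class ManagedRuleset:
    """Toy ruleset guarded by a kill switch (hypothetical design)."""

    def __init__(self, patterns):
        self._patterns = [re.compile(p) for p in patterns]
        self._enabled = threading.Event()  # thread-safe on/off flag
        self._enabled.set()

    def kill(self):
        """Disable the whole ruleset at once."""
        self._enabled.clear()

    def enable(self):
        """Re-enable the ruleset, for example after a fix is verified."""
        self._enabled.set()

    def inspect(self, body):
        """Return the patterns that match, or nothing if the switch is off."""
        if not self._enabled.is_set():
            return []  # skip all regex work, so no CPU is spent on rules
        return [p.pattern for p in self._patterns if p.search(body)]


if __name__ == "__main__":
    ruleset = ManagedRuleset([r"<script\b"])
    print(ruleset.inspect("<script>alert(1)</script>"))
    ruleset.kill()
    print(ruleset.inspect("<script>alert(1)</script>"))
```

The trade-off is that traffic passes uninspected by these rules while the switch is off, which is why re-enabling them after a tested fix matters.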

We then went on to review the offending pull request, roll back the specific rules, test the change to ensure that we were 100% certain that we had the correct fix, and re-enabled the WAF Managed Rulesets at 1452 UTC.

We recognize that an incident like this is very painful for our customers. Our testing processes were insufficient in this case and we are reviewing and making changes to our testing and deployment process to avoid incidents like this in the future.